My Research Direction

When done well, explainable AI (XAI) should help answer these questions:

“Why did the AI output this result for a given input?”

“Which input features or factors contributed the most to this decision?”

“Under what conditions is the AI reliable (or unreliable)?” (this is a big one)

“What are the limitations, biases, or risks of using this model in production?” (this one matters more to other stakeholders)

“How can we debug, audit, or improve the model's behavior, especially for fairness and safety?”
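As a minimal sketch of one way to approach the second question, here is leave-one-out occlusion: each feature is scored by how much the output changes when that feature alone is replaced by a baseline value. The toy linear model, its weights, and the zero baseline are hypothetical and chosen only for illustration; this is one simple attribution technique, not the only or definitive one.

```python
from typing import Callable, Sequence


def occlusion_attribution(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Score each feature by how much the model output changes when that
    feature alone is replaced by its baseline value (leave-one-out occlusion)."""
    full_output = model(x)
    scores = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline[i]  # knock out feature i, keep the rest
        scores.append(full_output - model(occluded))
    return scores


# Hypothetical toy model: a fixed linear scorer with illustrative weights.
WEIGHTS = [2.0, -1.0, 0.5]


def toy_model(features: Sequence[float]) -> float:
    return sum(w * f for w, f in zip(WEIGHTS, features))


x = [1.0, 3.0, 4.0]
scores = occlusion_attribution(toy_model, x, baseline=[0.0, 0.0, 0.0])
# Rank features by magnitude of contribution (largest first).
ranking = sorted(range(len(x)), key=lambda i: abs(scores[i]), reverse=True)
print(scores)   # [2.0, -3.0, 2.0]
print(ranking)  # [1, 0, 2]
```

For a linear model with a zero baseline, each score reduces to weight times feature value, which is why feature 1 ranks highest here. The method costs one extra model evaluation per feature, so applying the same idea to individual neurons of a large network quickly becomes expensive.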

One limitation of XAI is that it does not scale when we try to trace the path through every neuron. We need to understand why that level of detail would be needed in the first place.

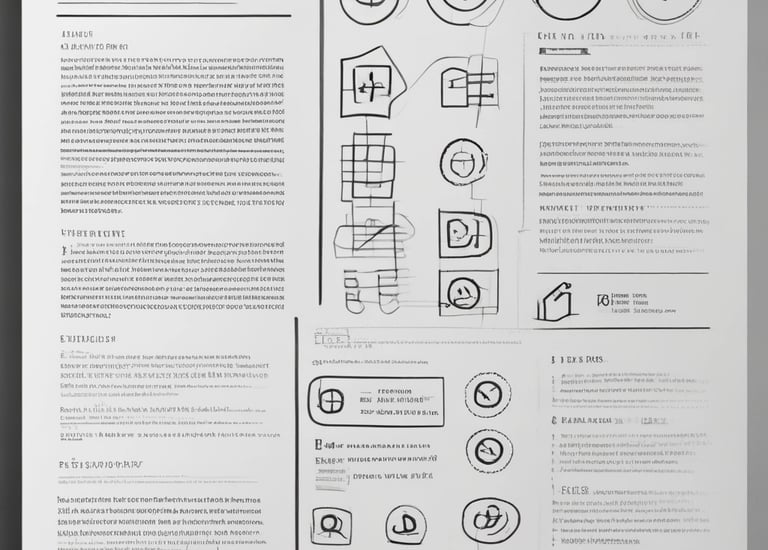

Model Audit

Examining AI behavior for hidden risks.

Safety Checks

Custom tests to ensure model reliability.

Recon Tools

Developing tools to probe AI safely.

Risk Analysis

Identifying and mitigating AI threats.